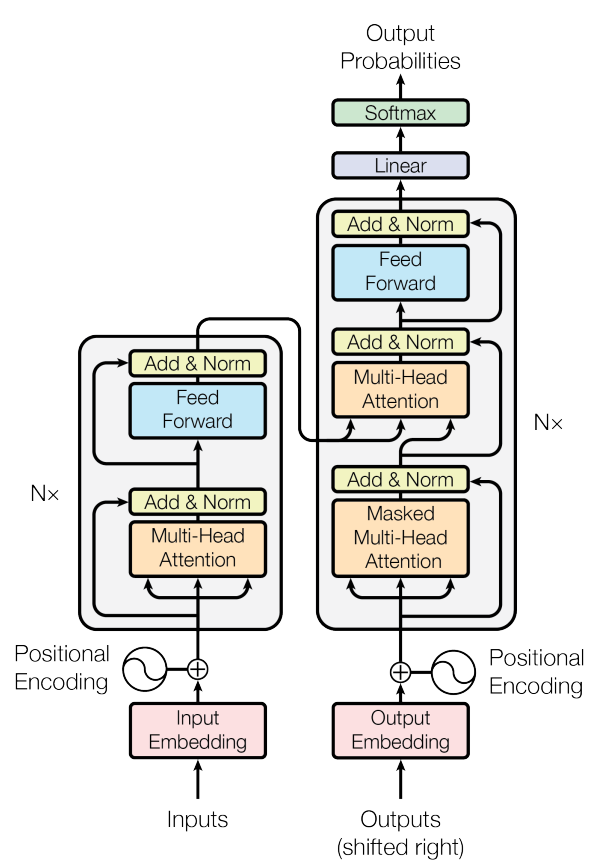

Credit: Vaswani et al.

Date

Mar 15, 2022 6:00 PM — 7:00 PM

Nisreen explained the technical aspects of attention and self-attention mechanisms, and explored how attention is used in the Transformer architecture to aid machine translation.

Supplemental Resources

Neural Machine Translation by Jointly Learning to Align and Translate, Bahdanau et al.

Attention Is All You Need, Vaswani et al.