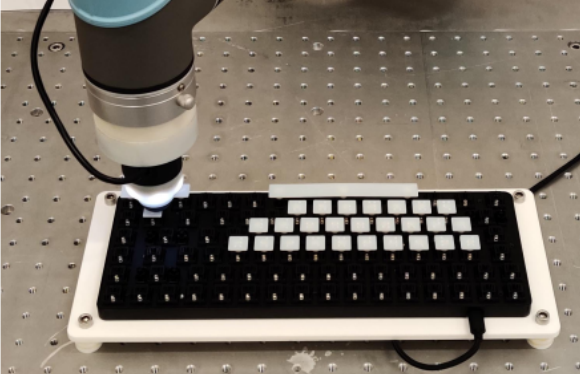

Credit: Church et al. 2020

Speaker(s): Sean Stapleton

Topic: GPT-3 and its Implications

In recent years, natural language processing (NLP) performance has improved dramatically across a number of tasks, including text completion, machine translation, and question answering. Much of this gain has been attributed to two trends in the NLP community: the introduction of transformers and the increase in model size (with a consequent need for intense computational power). Capitalizing on these trends, OpenAI recently released GPT-3, a transformer-based model with 175 billion parameters whose training corpus totaled roughly 500 billion tokens, most of them scraped from the internet. This MSAIL discussion focused predominantly on three questions addressed in the paper:

- Does a substantial increase in model size actually lead to better performance in downstream tasks?

- Can language models effectively model intelligent and adaptable thought?

- What are the biases and risks associated with training a language model on text scraped from across the internet?

Sean also covered the transformer and GPT-3 model architectures, though this aspect of the paper was not the focus of the discussion.

You can find the recording of this talk here.

Supplemental Resources

Papers:

Language Models are Few-Shot Learners (Brown et al.)