Harmful Bias in Natural Language Generation

Credit: Sheng et al.

Date: Mar 30, 2021, 6:00 PM to 7:00 PM

Speaker(s): Yashmeet Gambhir

Topic: Harmful Bias in Natural Language Generation

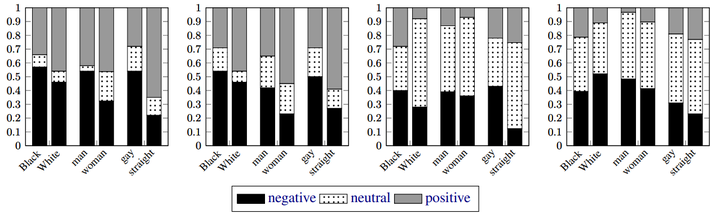

Large language models have taken over the NLP scene and have driven a surge of state-of-the-art results in natural language generation tasks such as machine translation, story generation, and chatbots. However, these models have been shown to reflect many harmful societal biases present in text across the internet. This talk covers two major papers on harmful bias in large LMs: the first identifies and quantifies this bias, and the second proposes a way to mitigate it.

Supplemental Resources

Papers:

The Woman Worked as a Babysitter: On Biases in Language Generation

Towards Controllable Biases in Language Generation